Finally it is time

So it has been… let me check… 2+ years. Time for an update on the Harvester experiment. To be honest, I actually used Proxmox for most of that time, but at the end of last year I finally switched over fully.

I am currently running a Chinese X99 motherboard with a Xeon and 64 GB of memory.

It works great, apart from a lot of things, but hey, it can always be worse: it works, and performance is actually quite decent once in a while.

So we are going to go through a couple of things: what is good, what is bad, and will I stay with it as my hypervisor of choice?

This won’t be a technical deep dive (maybe I will do one in the future); for now it is just an overview.

The good, the bad and the ugly

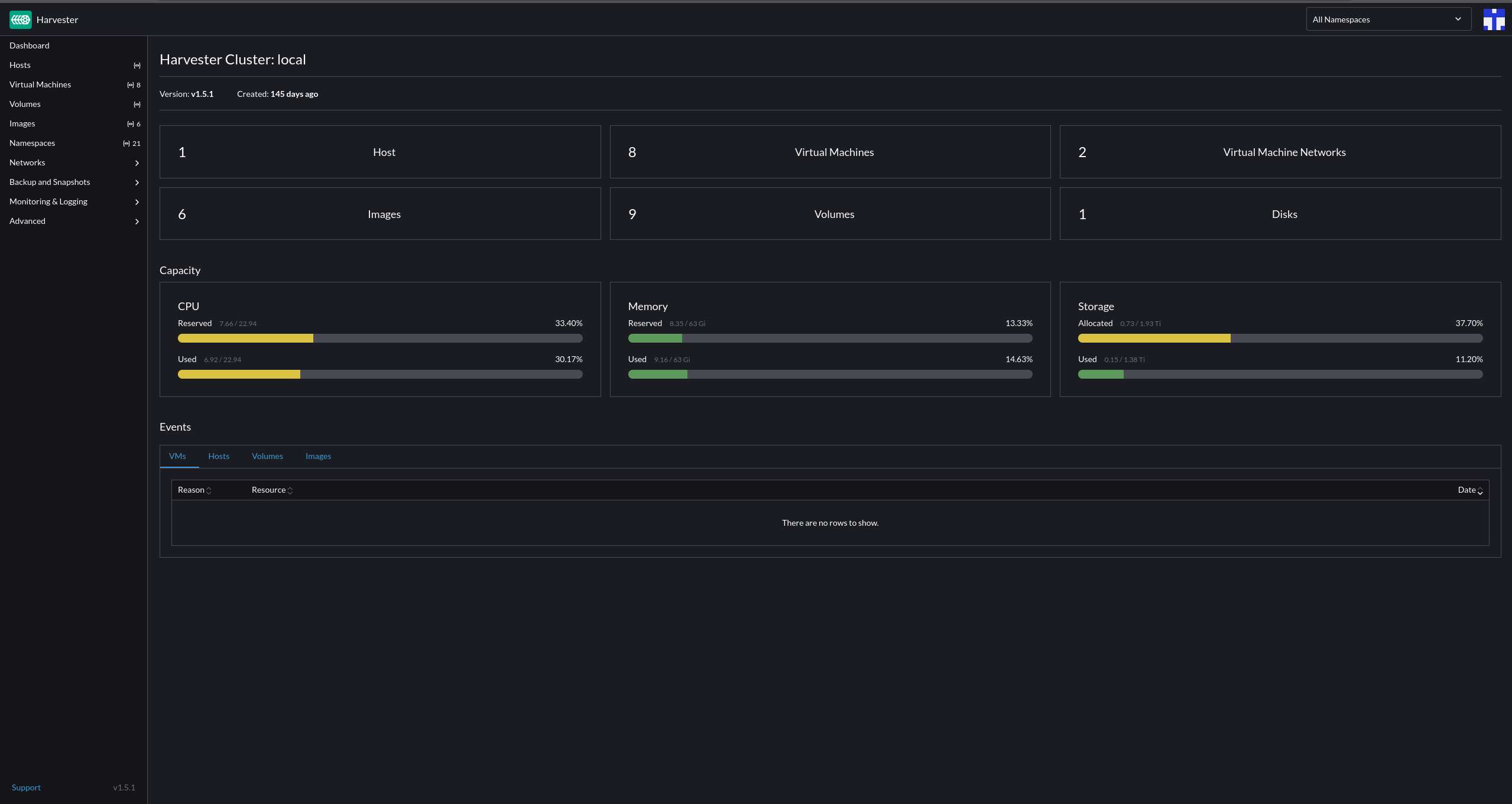

So what has been good after using Harvester for 5+ months? Quite a lot, actually: it is pretty fast, the way of working makes sense after a while, and the integrated Grafana is a nice bonus. Going through all of it would be a lot of work and I am a bit lazy, so instead I will list the items I found pretty cool and that work well, point out the items I found less good, and finish with a conclusion on whether I will stick with it or not.

The good

So what is good about Harvester? Quite a lot, actually.

- It is pretty fast overall

- Integrated Grafana & Prometheus!

- Nice overview of all the different hosts (if you have more than one, at least)

- Namespaces and more of a Kubernetes way of working

- A pretty extensive API

- Good documentation

The list is pretty long and I won’t touch on everything, but I will do my best.
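Since Harvester runs its VMs as KubeVirt `VirtualMachine` resources, the Kubernetes way of working and the API go hand in hand: a VM can also be written down as plain YAML. Below is a minimal sketch, not what the Harvester UI generates (the UI adds its own labels and annotations), assuming the `default` namespace and an already existing PVC with the hypothetical name `demo-vm-disk-0`:

```yaml
# Hypothetical example VM; names and sizes are placeholders.
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: demo-vm
  namespace: default
spec:
  runStrategy: Always            # keep the VM running (newer replacement for running: true)
  template:
    spec:
      domain:
        cpu:
          cores: 2
        resources:
          requests:
            memory: 4Gi
        devices:
          disks:
            - name: rootdisk
              disk:
                bus: virtio      # paravirtualized disk bus
      volumes:
        - name: rootdisk
          persistentVolumeClaim:
            claimName: demo-vm-disk-0   # existing PVC that holds the OS disk (assumed)
```

With the Harvester cluster's kubeconfig, `kubectl apply -f demo-vm.yaml` should create it and `kubectl get vms -n default` lists it, although for day-to-day use the UI does all of this for you.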

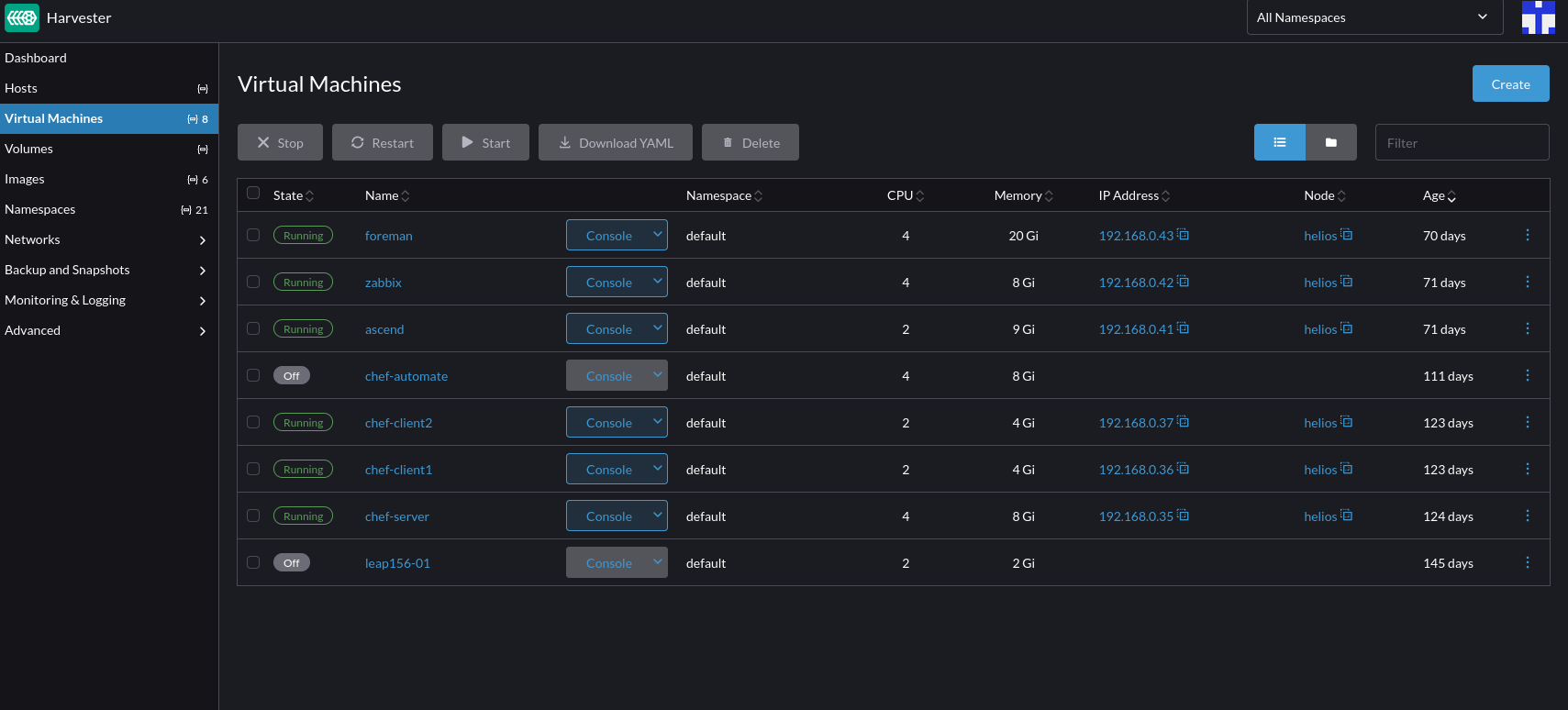

SPEED AND POWER

The speed of the system is not bad at all; overall everything runs smoothly. It is running on my main node, which has the following specs: a Chinese X99 motherboard with 64 GB of memory and an Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz. Nothing too special. I added some extra NICs, and it has been running stable. I don’t run a lot of machines, and the ones I do run mostly need more memory than CPU, so overall I have more than enough.

The overview is nice and works well. Speed-wise the VMs behave like they should and I haven’t noticed any stutters whatsoever. Resource allocation also works correctly, and network-wise everything works as it should.

Volumes, images, and networking: so much to see

Every VM of course gets a volume for every disk it has, and each volume is backed by its own PV and PVC. It works pretty smoothly and I never have to touch kubectl. The Images tab contains all the images you download, for example ISOs, VM images and so on.
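To give a feel for what sits behind each disk, the claim Harvester creates looks roughly like the sketch below. This assumes the default `harvester-longhorn` storage class and a hypothetical name and size; the real objects carry extra Harvester annotations, so treat it as an illustration rather than something to apply.

```yaml
# Hypothetical PVC for a VM root disk; name and size are examples only.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-vm-disk-0
  namespace: default
spec:
  accessModes:
    - ReadWriteMany                      # shared access so the disk can follow a live-migrated VM
  volumeMode: Block                      # VM disks are attached as raw block devices
  storageClassName: harvester-longhorn   # assumed default Harvester storage class
  resources:
    requests:
      storage: 40Gi
```

If you ever do want to look at them, `kubectl get pv,pvc -A` lists both sides.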

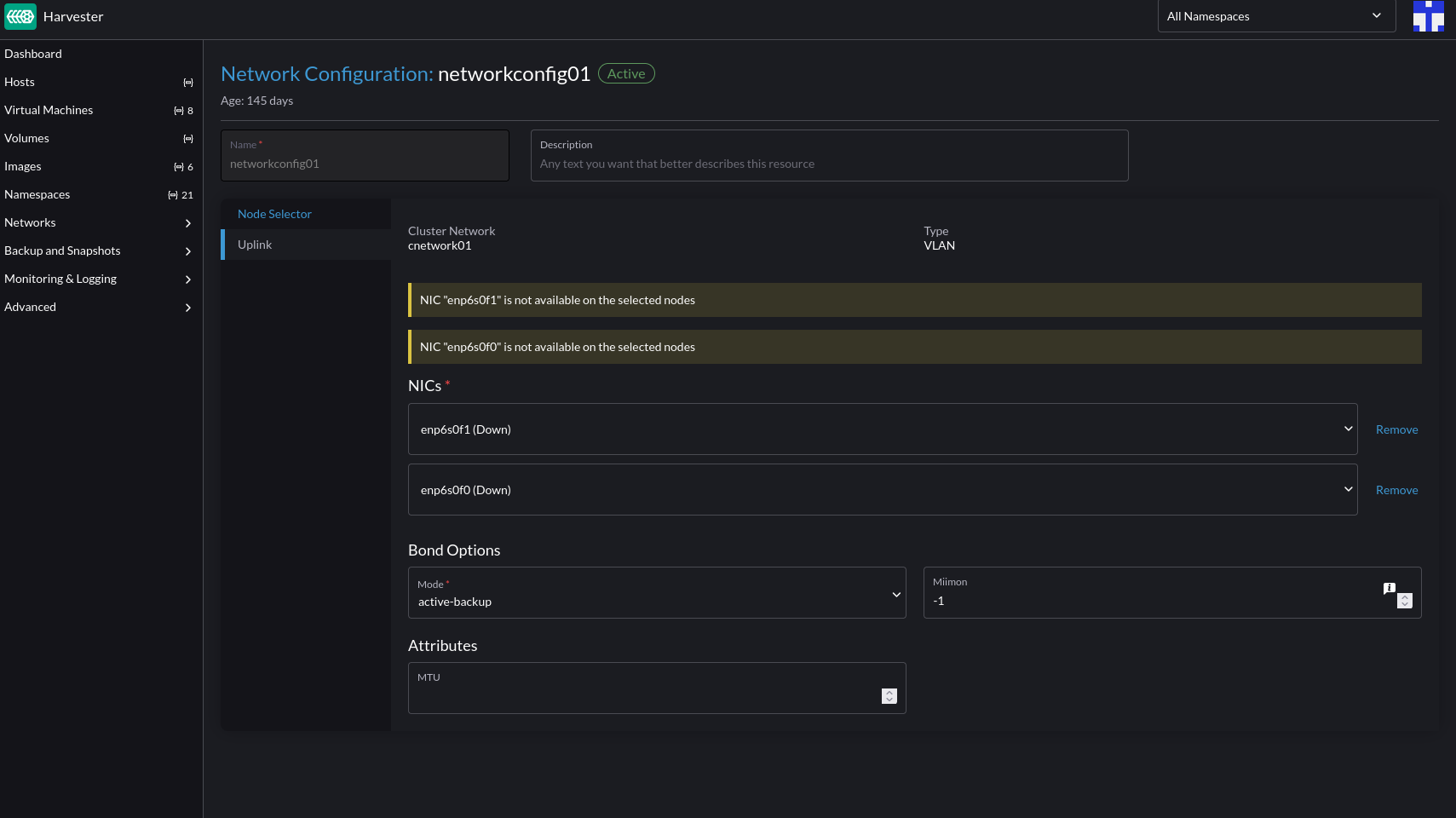

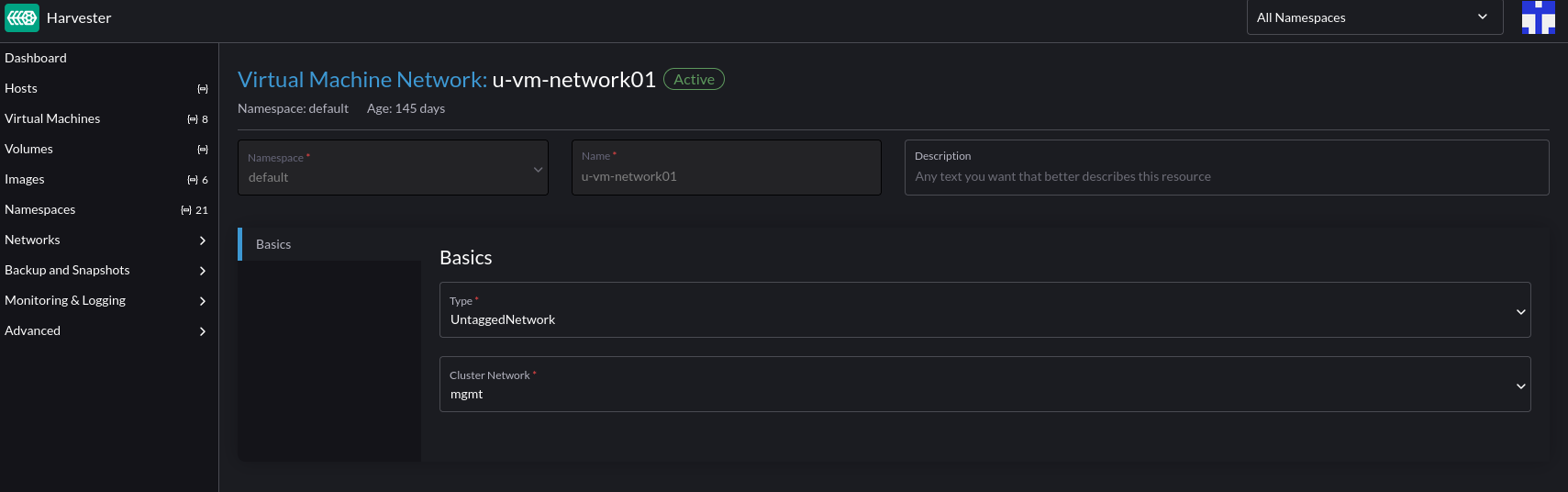

Networking works a bit differently but is easy to use. You create a cluster network and then dedicate one or more NICs to that network.

Once the cluster network exists, you can create a virtual machine network on top of it, which your VMs then use.
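Under the hood those two steps become Kubernetes objects as well. The sketch below is based on my understanding of Harvester’s network CRDs, assuming a hypothetical cluster network `vm-net`, NIC `eno2` and VLAN 100; check the Harvester networking docs for your version before applying anything like it. The VM network is a Multus `NetworkAttachmentDefinition` that points at the bridge Harvester creates for the cluster network.

```yaml
# 1. Cluster network (hypothetical name; keep it short, it is used in the bridge name)
apiVersion: network.harvesterhci.io/v1beta1
kind: ClusterNetwork
metadata:
  name: vm-net
---
# 2. Dedicate a NIC to that cluster network (NIC name is an example)
apiVersion: network.harvesterhci.io/v1beta1
kind: VlanConfig
metadata:
  name: vm-net-uplink
spec:
  clusterNetwork: vm-net
  uplink:
    nics:
      - eno2
---
# 3. Virtual machine network on VLAN 100 of that cluster network
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: vlan100
  namespace: default
  labels:
    network.harvesterhci.io/clusternetwork: vm-net
spec:
  # Assumes the bridge for cluster network "vm-net" is named "vm-net-br"
  config: '{"cniVersion":"0.3.1","name":"vlan100","type":"bridge","bridge":"vm-net-br","promiscMode":true,"vlan":100,"ipam":{}}'
```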

You can also set up load balancers and IP pools, but I haven’t used those parts, so I can’t say anything about them.

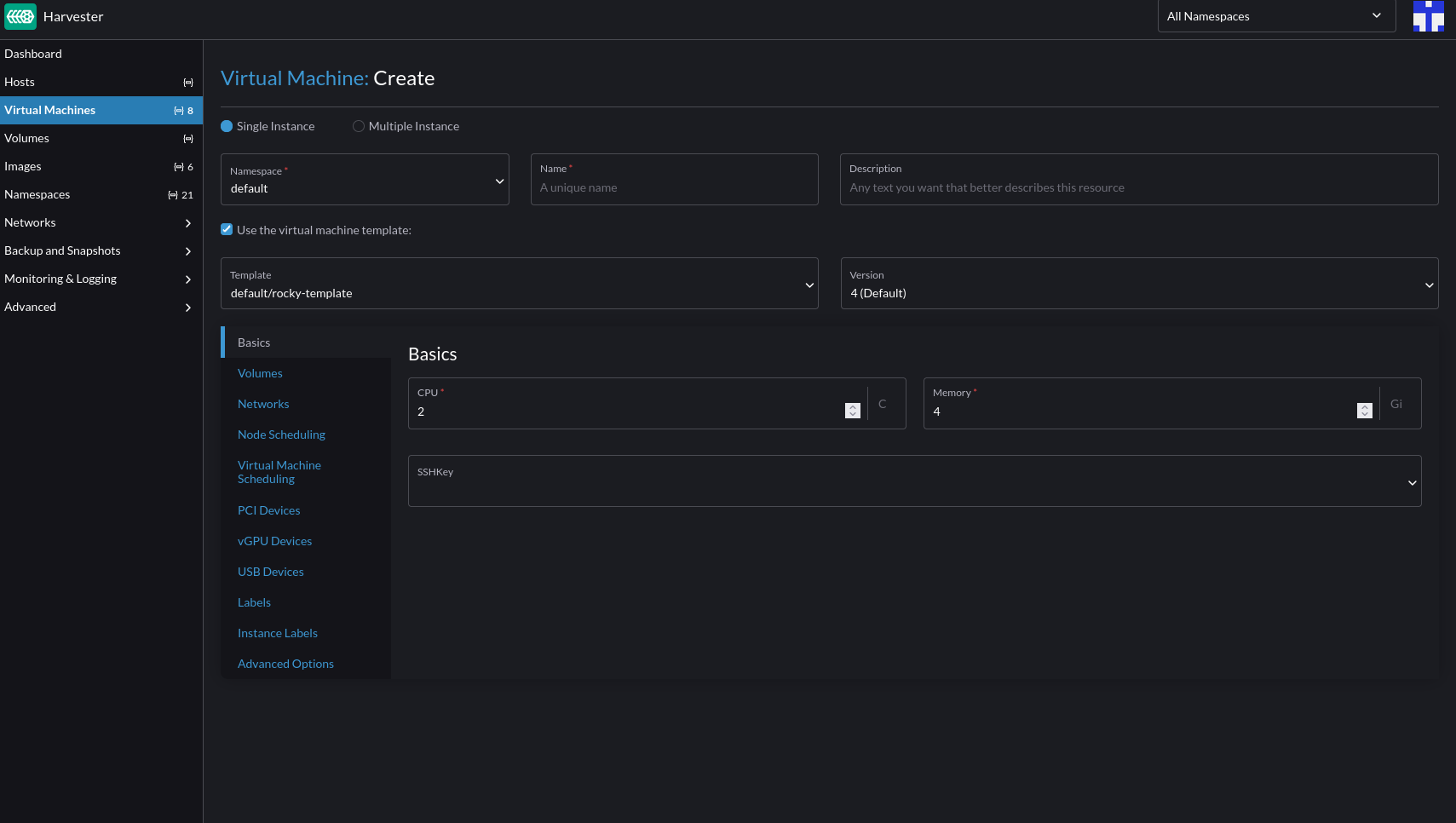

Creating a VM & VM template: something a bit different

If you want to make your life easier you of course look into automating things, and with Harvester that means taking a look at templates. As the name says, you create a template that you then reuse whenever you make a VM; in the template you specify the CPU, the memory and which network to use. Under the advanced options you can then use cloud-init to configure the VM further. This needs a bit more setup up front, since the VM image has to support cloud-init.

Example of the user data:

```yaml
#cloud-config
package_update: true
packages:
  - qemu-guest-agent
  - sudo
runcmd:
  - - systemctl
    - enable
    - --now
    - qemu-guest-agent.service
ssh:
  install-server: true
  allow-pw: true
ssh_quiet_keygen: true
allow_public_ssh_keys: true
timezone: Europe/Amsterdam
users:
  - name: jeffrey
    groups: [ adm, sudo ]
    lock-passwd: false
    sudo: ALL=(ALL) NOPASSWD:ALL
    shell: /bin/bash
    ssh_authorized_keys:
```
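One thing worth double-checking in the user data above: as far as I know, the `ssh:` block with `install-server` and `allow-pw` comes from Ubuntu’s autoinstall format rather than cloud-init, so cloud-init most likely ignores it. A sketch of the plain cloud-init equivalent, assuming a Debian or Ubuntu image (where the package is called `openssh-server`):

```yaml
#cloud-config
packages:
  - openssh-server   # install the SSH server (Debian/Ubuntu package name)
ssh_pwauth: true     # allow password logins over SSH
# Newer cloud-init releases also prefer lock_passwd over the older lock-passwd spelling.
```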

Network data:

```yaml
network:
  version: 2
  ethernets:
    eth0:
      addresses:
        - 192.168.0.X/24
      gateway4: 192.168.0.1
      nameservers:
        search: [jplace.lan, bplace.nl]
        addresses: [192.168.0.1]
```
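`gateway4` still works but is deprecated in newer netplan versions, which print a warning for it. A sketch of the same static config using an explicit default route instead, keeping the placeholder address from the network data above:

```yaml
network:
  version: 2
  ethernets:
    eth0:
      addresses:
        - 192.168.0.X/24      # replace X with the VM's own address
      routes:
        - to: 0.0.0.0/0       # default route, replaces gateway4
          via: 192.168.0.1
      nameservers:
        search: [jplace.lan, bplace.nl]
        addresses: [192.168.0.1]
```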

When you then press Create VM and choose to use the virtual machine template, it auto-populates all the items specified in the template.

A very nice thing about templates is that they are versioned! This helps a lot when you are trying things out, or simply when you want a version history.

The bad

Sadly, it isn’t all nice things with Harvester. Overall I am happy with it, but there are some points that are just irritating.

From a homelab perspective

From a homelab perspective it isn’t the best hypervisor to have: it is pretty complicated overall and very Kubernetes-minded. They took Rancher and built a lot more on top of it. Because it is quite difficult, I can’t really see it earning a solid place in the homelab scene.

A closed OS

The OS itself is mostly closed off; it has SUSE Linux Enterprise Micro 5.5 at its base. It isn’t an OS that opens up to you and lets you do and install everything by default. That isn’t the worst thing, since you of course want your hypervisor to be stable, but for someone like me who likes to dig in and install whatever I want whenever I want, it is a bit of a minus. BUT it is stable as hell, so kudos to SUSE for that!

RTFM a lot

It isn’t the easiest hypervisor to use, or to find everything you want to know about. A lot of RTFM is needed. Is that bad? No, the documentation SUSE wrote is actually pretty good, but the out-of-the-box experience is a challenge at times. So the RTFM itself is not really the downside; it is more the ease of use and finding everything.

So the conclusion

I wrote quite a lot for a small blog post, so let’s wrap it up and get to a conclusion.

I didn’t go much into the Grafana and Prometheus part of the Harvester install, but that is because I broke it while testing stuff and deleting the wrong PV and PVC. So that’s my issue and my bad, nothing to do with Harvester, which does a great job.

So is it the next big hypervisor to use or watch out for?

Well, here is how I see it. For existing setups that already have a hypervisor… no… UNLESS you come from VMware. Then definitely run a PoC and try it out. Especially if you have multiple nodes and can use Longhorn and all the features properly, it is definitely a good option.

For homelabs, smaller workloads and so on, I would say use Proxmox instead. It offers more options, runs stably on a wider range of hardware, and its requirements are quite a lot lower.

It definitely has potential, and it is a nice change in the current hypervisor scene to see a new big player getting going.

So will I stay on Harvester as my hypervisor?

That is the big question, of course. For now, after quite a while with it, I am in doubt.

A couple of points for me:

- Packer support is not really working, or at least unknown. This would take more time to investigate.

- Not enough knowledge on my side of the Kubernetes parts.

- Closed-off base OS. This can be nice but can also be an issue; I would like to see it a bit more open, more of a “traditional OS”.

- Updates take a long time and the update process is a bit more work. This may be on me, but updating Harvester from 1.4 to 1.5 took quite some time and some figuring out.

But it is also a cool hypervisor to use, and I learned A LOT about Longhorn, Kubernetes and just how a different hypervisor works. I will keep Longhorn on my main node for now, but when I get a new 2U case I will most likely reinstall it with Proxmox and see whether the kernel issues that made me switch have been solved.

That’s all, folks

Well, that was all. Most likely not a lot of people will read it or find it interesting, but I don’t care.

If you found it interesting or learned something from it, then please share it!

Next up, I will be working a bit more on Uyuni, and I plan to make some YouTube videos on how to configure parts of it. I am also working on updating my Ansible modules, and maybe I will create some more in the future.